Simulation Experiments

Ablation Experiments

(playing speed: 2x)

The final policy

The first-stage reward only

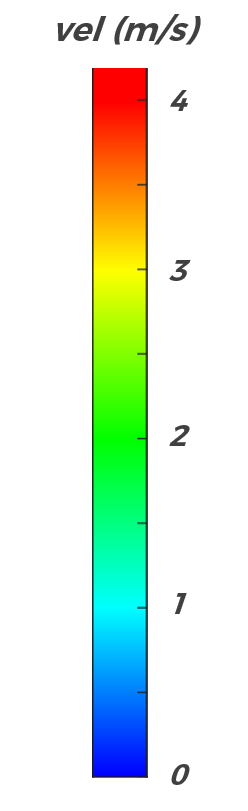

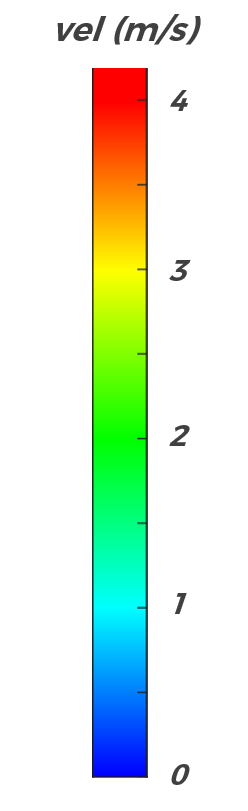

v† = 2 m/s

Benchmark Experiments

Our method

EVA-planner

Animals learn to adapt the agility of their movements to their capabilities and to the environments they operate in. Mobile robots should likewise be able to balance agility and safety. The aim of this work is to endow flight vehicles with the ability to adapt their agility in previously unknown, partially observable cluttered environments. We propose a hierarchical learning and planning framework. It combines two components. The first is trial-and-error learning, used to comprehensively learn an agility policy that takes the vehicle's observations as input. The second is well-established model-based trajectory generation methods. Technically, we use online model-free reinforcement learning and a pre-training/fine-tuning reward scheme to obtain a deployable policy. Statistical results in simulation demonstrate the advantages of our method over constant-agility baselines and an alternative method in terms of flight efficiency and safety. In particular, the policy exhibits intelligent behaviors, such as perception awareness, that distinguish it from other approaches. By deploying the policy on hardware, we verify that these advantages carry over to the real world.
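The hierarchy described above can be illustrated with a toy sketch. This is not the authors' implementation. The sketch makes the following assumptions:

* The high-level agility policy outputs a single speed limit from an observation.
* The low-level planner only has to respect that limit.

Several names are hypothetical stand-ins: `Observation.clearance`, `toy_adaptive_policy` (a hand-written heuristic in place of the learned network), `plan_segment` (a 1-D constant-speed planner in place of real model-based trajectory generation), and `fly`. The `constant_agility` helper shows how a constant-agility baseline fits the same interface. It assumes the v† labels on this page denote such a fixed speed.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Observation:
    # Hypothetical observation: free distance (m) around the vehicle.
    clearance: float


# High level: an agility policy maps an observation to a speed limit (m/s).
AgilityPolicy = Callable[[Observation], float]


def constant_agility(v_dagger: float) -> AgilityPolicy:
    """Constant-agility baseline: ignores the observation entirely."""
    return lambda obs: v_dagger


def toy_adaptive_policy(obs: Observation) -> float:
    """Hand-written stand-in for a learned policy (NOT the paper's network):
    fly fast in open space, slow down as clearance shrinks."""
    v_min, v_max = 1.0, 3.5
    scale = min(max(obs.clearance / 3.0, 0.0), 1.0)  # saturate at 3 m
    return v_min + (v_max - v_min) * scale


def plan_segment(start: float, goal: float, v_limit: float,
                 dt: float = 0.1) -> List[float]:
    """Low level (toy): a 1-D constant-speed trajectory sampled every dt.
    Rounding the step count up keeps the actual speed <= v_limit."""
    duration = abs(goal - start) / v_limit
    steps = max(1, math.ceil(duration / dt))
    return [start + (goal - start) * k / steps for k in range(steps + 1)]


def fly(policy: AgilityPolicy, clearances: List[float],
        segment_length: float = 1.0,
        dt: float = 0.1) -> Tuple[float, List[float]]:
    """Replanning loop: query the policy once per segment, then let the
    planner build a trajectory under the chosen speed limit.
    Returns total flight time (s) and the speed limit used per segment."""
    position, elapsed, limits = 0.0, 0.0, []
    for c in clearances:
        v = policy(Observation(clearance=c))
        traj = plan_segment(position, position + segment_length, v, dt)
        position = traj[-1]
        elapsed += (len(traj) - 1) * dt
        limits.append(v)
    return elapsed, limits


if __name__ == "__main__":
    course = [3.0, 0.5, 3.0]  # open, cluttered, open
    for name, pol in [("adaptive", toy_adaptive_policy),
                      ("v=2.0", constant_agility(2.0)),
                      ("v=3.5", constant_agility(3.5))]:
        t, limits = fly(pol, course)
        print(name, round(t, 2), [round(v, 2) for v in limits])
```

On this toy course, the adaptive stand-in matches the fastest baseline in open segments and slows down in the cluttered one. The sketch has no collision model, so it shows only the interface split between the agility policy and the planner. It does not show the safety trade-off the paper evaluates.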

v† = 2.5 m/s

v† = 3.5 m/s

proposed method

@misc{zhao2024learning,
  title={Learning Agility Adaptation for Flight in Clutter},
  author={Guangyu Zhao and Tianyue Wu and Yeke Chen and Fei Gao},
  year={2024},
  eprint={2403.04586},
  archivePrefix={arXiv},
  primaryClass={cs.RO}
}